Friday, June 27, 2014

Thursday, June 26, 2014

SGA_MAX_SIZE & SGA_TARGET / MEMORY_TARGET & MEMORY_MAX_TARGET

As and when Oracle release new version of database, DBA gets

new bunch of features.Before Oracle 9i, DBA has to maintain the whole bunch of

memory parameters like DB_BLOCK_BUFFERS, SHARED_POOL_SIZE, set of PGA

parameters *_AREA_SIZE, LOG_BUFFER and so on. Real complexity before 9i is

assigning correct values to *_AREA_SIZE (PGA) parameters as these values is not

for whole instance; it is for EACH oracle user process(Oracle user process

count can grow upto maximum of PROCESSES settings).

SGA_TARGET and SGA_MAX_SIZE

In 9i, Oracle introducted a new parameters called

PGA_AGGREGATE_TARGET and WORKAREA_SIZE_POLICY which helps users to assign a

single value for the whole instance's PGA and Oracle instance itself will do

self mangement for PGA memory. In earlier version, DBA has to assign value for

_AREA_SIZE parameters which assign equal size for all sessions.

Oracle 10g has gone to next level from 9i wherein it

automates the memory management of whole SGA. Instead of DB_CACHE_SIZE, SHARED_POOL_SIZE,

JAVA_POOL_SIZE, LARGE_POOL_SIZE and LOG_BUFFER parameter; user has to manage

just SGA_TARGET and SGA_MAX_SIZE

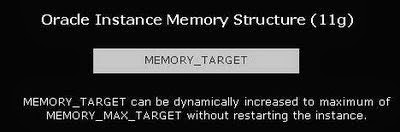

Oracle 11g has gone to the next level from 10g of automatic

memory management where in the whole instance can be controlled by

initialization parameter MEMORY_TARGET and a maximum memory size initialization

parameter MEMORY_MAX_TARGET. Oracle Database then tunes to the target memory

size, redistributing memory as needed between the system global area (SGA) and

the instance program global area (instance PGA).

SGA_TARGET and SGA_MAX_SIZE

*SGA_MAX_SIZE is the largest amount of memory that will be

available for the SGA in the instance and it will be

allocated from memory. You do not have to use it all, but it will be

potentially wasted if you set it too high and don’t use it. It is not a dynamic

parameter. Basically it gives you room for the Oracle instance to grow.

*SGA_TARGET is actual memory in use by the

current SGA. This parameter is dynamic and can be increased up to the value of

SGA_MAX_SIZE.SGA_MAX_SIZE and SGA_TARGET both are the parameter are used to

change the SGA SIZE.SGA_MAX_SIZE sets the maximum value for sga_target.

*SGA_TAGET is 10G feature used to change the sga size

dynamically .it specifies the total amount of SGA memory available to an

instance.

This feature is called Automatic Shared Memory

Management. With ASMM, the parameters java_pool_size, shared_pool_size,

large_pool_size and db_cache_size are affected.

*The

SGA_MAX_SIZE parameter is the max allowable size to resize the SGA Memory area

parameters. If the SGA_TARGET is set to some value then the Automatic Shared

Memory Management (ASMM) is enabled, the SGA_TARGET value can be adjusted up to

the SGA_MAX_SIZE parameter, not more than SGA_MAX_SIZE parameter value.

MEMORY_TARGET and

MEMORY_MAX_TARGET

you

can manage SGA and PGA together rather than managing them separately.

If

you set SGA_TARGET, SGA_MAX_SIZE and PGA_AGGREGATE_TARGET to 0 and set

MEMORY_TARGET (and optionally MEMORY_MAX_TARGET) to non zero value, Oracle will

manage both SGA components and PGA together within the limit specified by you.

If

MEMORY_TARGET is set to 1024MB, Oracle will manage SGA and PGA components

within itself.

If

MEMORY_TARGET is set to non zero value:

*SGA_TARGET,

SGA_MAX_SIZE and PGA_AGGREGATE_TARGET are set to 0, 60% of memory mentioned in

MEMORY_TARGET is allocated to SGA and rest 40% is kept for PGA.

*If

SGA_TARGET and PGA_AGGREGATE_TARGET are set, they will be considered the

minimum values for the sizes of SGA and the PGA respectively (But sum of

SGA_TARGET and PGA_AGGREGATE_TARGET should be less than or equal to

MEMORY_TARGET).

*SGA_TARGET

is set to non zero value and PGA_AGGREGATE_TARGET is not set. Still these

values will be autotuned and PGA_AGGREGATE_TARGET will be initialized with

value of (MEMORY_TARGET-SGA_TARGET).

*PGA_AGGREGATE_TARGET

is set and SGA_TARGET is not set. Still both parameters will be autotunes.

SGA_TARGET will be initialized to a value of

(MEMORY_TARGET-PGA_AGGREGATE_TARGET).Wednesday, June 25, 2014

ORA-00445: background process "W001" did not start after 120 seconds

Cause

*

Recent linux kernels have a feature called Address Space Layout Randomization

(ASLR).

*

ASLR is a feature that is activated by default on some of the newer linux

distributions.

*

It is designed to load shared memory objects in random addresses.

*

In Oracle, multiple processes map a shared memory object at the same address

across the processes.

*

With ASLR turned on Oracle cannot guarantee the availability of this shared

memory address.

This

conflict in the address space means that a process trying to attach a shared

memory object to a specific address may not be able to do so, resulting in

a failure in shmat subroutine.

However,

on subsequent retry (using a new process) the shared memory attachment may

work. The result is a “random” set of failures in the alert log.

Solution

*

It should be noted that this problem has only been positively diagnosed in

Redhat 5 and Oracle 11.2.0.2.

*

It is also likely, as per unpublished BUG:8527473, that this issue will

reproduce running on Generic Linux platforms

running any Oracle 11.2.0.x. or 12.1.0.x

on Redhat/OEL kernels which have ASLR.

*

This issue has been seen in both Single Instance and RAC environments.

You

can verify whether ASLR is being used as follows:

#

/sbin/sysctl -a | grep randomize

kernel.randomize_va_space

= 1

If

the parameter is set to any value other than 0 then ASLR is in use. On Redhat 5

to permanently disable ASLR. Add/modify this parameter in /etc/sysctl.conf

-

kernel.randomize_va_space=0

-

kernel.exec-shield=0

You

need to reboot for kernel.exec-shield parameter to take effect. Note that both

kernel parameters are required for ASLR to be switched off.

There

may be other reasons for a process failing to start, however, by switching ASLR

off, you can quickly discount ASLR being the problem. More and more issues

are being identified when ASLR is in operation.

References:

Oracle Transaction

Now that we have looked at the Oracle

architecture, we will look at a sample transaction in detail to see how

everything works together. This sample transaction is shown in Figure

The

following covers a basic transaction inside Oracle:

1.

A user logs starts SQL*Plus and enters a username, password, and database name

(referred to as a connect string). On the client side, Oracle finds the

database name in tnsnames.ora. Using the specified IP address, port number, and

connect string, the user process establishes a connection with the database

server.

2.

The Oracle listener process on the database server receives the user process

connection. It looks for the databases in listener.ora and routes the user

process to the specified database. At this point we are assuming a dedicated

(not MTS) connection.

3.

A new server process inside the database is spawned and establishes contact

with the user process. At this stage the listener process is no longer

involved. After password authentication, the user process is connected.

4.

The user process issues a SQL statement to update a row of data in a table.

This statement goes across the network to the dedicated server process.

5.

Information inside the PGA for the server process is updated to reflect the new

SQL statement.

6.

The server process runs a quick hash algorithm on the statement and receives a

value. It then scans the shared SQL area for any statements with the same hash

value. For this example, it does not find a matching hash value.

7.

The server process scans and finds a free area within the shared SQL area to

work. It parses the user’s SQL statement and checks the syntax. Oracle verifies

that the table exists in the data dictionary and verifies the user’s object-level

permissions on the table being modified. Then it looks at the statement and the

stored statistics it has about the table and any indexes to develop an

execution plan. Oracle then issues an exclusive lock on the row of data and

attempts to execute the statement. Oracle reads the current System Change

Number (SCN) for the system and uses this value to maintain consistency

throughout

the transaction.

8.

The server process scans the database buffer cache to see if the data block is

already cached. In our example the server process does not find a copy of the

block in the buffer cache, so it must read in a copy.

9.

A scan of the database buffer cache finds a consistent data block buffer that

can be reused. The server process follows the prescribed execution plan and

retrieves the block containing the row to be modified. It overwrites the buffer

cache buffer with the new block. The block is marked as being at the MRU end of

the Least Recently Used list. In Oracle 8i it is believed that a block will be

placed in the middle of the LRU list using Mid Point Insertion, but there isn’t

enough public information to confirm this.

10.

A slot in the rollback segment header is briefly acquired, and space in a rollback

segment extent is found. The block of this rollback segment extent is moved to

the database buffer cache in a similar manner as in step 8. An UPDATE statement

to undo the user’s UPDATE statement is generated and placed in the rollback

segment. Undo for the update to the row’s index is also generated and placed in

the rollback segment.

11.

The row of data is actually modified in the database buffer cache memory. Index

and rollback

segment

buffers are also inside the buffer cache.

12.

The server process finds space and writes the change to the redo log buffer.

This includes both the modified data and the contents of the rollback segment.

13.

At this stage the user who issued the statement can see the change with a

SELECT statement. Any other user issuing a SELECT statement will see the row as

it was before step 4. The block containing the modified row is now considered

dirty because it has been modified but not yet committed. If another user attempts

to issue a statement to modify the same row, that session will seem to hang because

it is waiting on the first user to release the row exclusive lock acquired in

step 6.

14.

The user types the COMMIT command at the SQL*Plus prompt and presses Enter.

This is considered an explicit commit and is a signal to Oracle to make

permanent any changes made by the user. What if the user types the word EXIT at

the SQL*Plus prompt and presses Enter? This is an implicit commit because the

user is existing normally. The changes to the data will be made permanent.

15.

The Oracle server process receives the instruction to commit the row update. A

unique System Change Number is assigned to the transaction in the rollback

segment transaction table and in the redo log buffer. LGWR writes everything in

the redo log buffer to the active online redo log file(s). Once the data is

written to the redo log file(s) and Unix has confirmed the write to be

successful, Oracle considers the transaction complete and the change permanent.

If a database crash were to occur, the changes to the data would still be

recovered.

16.

DBWR will eventually write every dirty block in the buffer cache to disk, but

that may not necessarily happen yet. In fact, the modified blocks may already

have been written to disk. This will occur at the normal time when DBWR writes.

A user commit does not force DBWR to write. The modified blocks may still reside

in the database buffer cache, but the transaction is considered complete by

Oracle because LGWR successfully wrote to the online redo log.

17.

The row-level lock held by the user is released. The user receives a message

stating the commit was successful.

18.

The other statement (in step 13) waiting to update the row will now receive an

exclusive row lock, and the steps starting at step 6 may occur.

19.

The first user issues an EXIT statement in SQL*Plus. This causes any new DML

statements to be committed. Next the Oracle server process and the

corresponding user process terminate. Memory

resources

and any locks held in the user’s PGA/UGA are released back to the system.

Note

that this was discussed only at the Oracle level and that we did not yet

mention how the memory and disk are accessed at the Unix level. Relatively simple

transactions such as this occur very frequently and involve many steps. This

should underscore the need for a highly tuned system because any inefficiency

could result in noticeable performance problems.

HugePages for Oracle on Linux

From

Oracle documentation, here are some major advantages of using HugePages.

* RAM is managed in 4k pages in 64 bit

Linux. When memory sizes were limited, and systems with more than 16G RAM

were rare, this was not a problem. However, as systems get more memory, the

number of memory pages increased and become less manageable. Hugepages

make managing the large amounts of memory available in modern servers much less

CPU intensive.

* HugePages is crucial for faster Oracle database performance on Linux

if you have a large RAM and SGA.

* HugePages are not swappable. Therefore there is no page-in/page-out mechanism

overhead. HugePages are universally regarded as pinned.

Thus Oracle SGA memory must either be all hugepages are no hugepages. If you allocate hugepages for Oracle, and don’t allocate enough for the

entire SGA, Oracle will not use any hugepage memory. If there is not

enough non-hugepage memory, your database will not start. Finally,

enabling hugepages will require a server restart, so if you do not have the

ability to restart your server, do not attempt to enable hugepages.

Implementing HugePages has become common practice with Oracle 11g and is

fairly well documented in MOS Note 361468.1.

The basics steps are as follows:

* Set the memlock

ulimit for the oracle user.

* Disable Automatic Memory Managment if necesary as it is incompatible with HugePages.

* Run the Oracle supplied hugepages_settings.sh script to calculate the recommended value for the vm.nr_hugepages kernel parameter.

* Edit /etc/sysctl.conf with the vm.nr_hugepages with the recommeneded setting.

* Reboot the server

* Disable Automatic Memory Managment if necesary as it is incompatible with HugePages.

* Run the Oracle supplied hugepages_settings.sh script to calculate the recommended value for the vm.nr_hugepages kernel parameter.

* Edit /etc/sysctl.conf with the vm.nr_hugepages with the recommeneded setting.

* Reboot the server

If you have Oracle Database 11g or later, the default

database created uses the Automatic Memory Management (AMM) feature which is

incompatible with HugePages. Disable AMM before proceeding. To disable, set the

initialization parameters MEMORY_TARGET and MEMORY_MAX_TARGET to 0 (zero)

The database we were working with was 10g. As it turns

out, there are some differences between Oracle 10 and 11, mainly that there is no HugePage

logging in the alert log on version 10. See MOS Note: 1392543.1

Another noticable difference is the fact that the initialization parameter use_large_pages was not added until version 11.2.0.2. In the event that you have multiple databases on one machine, this parameter allows you to control which databases use HugePages. In addition, it can prevent a database from starting up if not enough HugePages can be allocated at instance startup. See “use_large_pages to enable HugePages in 11.2 [ID 1392497.1]” for more detailed info on the values for this parameter.

There are both Oracle

database settings and Linux OS settings that must be adjusted in order to

enable hugepages. The Linux and oracle settings of concern are below:

Linux OS settings:

/etc/sysctl.conf:

- vm.nr_hugepages

- kernel.shmmax

- kernel.shmall

/etc/security/limits.conf:

- oracle soft memlock

- oracle hard memlock

Oracle Database spfile/init.ora:

SGA_TARGET

SGA_MAX_SIZE

MEMORY_TARGET

MEMORY_MAX_TARGET

USE_LARGE_PAGES

First, calculate the Linux OS settings.

Kernel.shmmax should be set to the size

of the largest SGA_TARGET on the server plus 1G, to account for other

processes. For a single instance with 180G RAM, that would be 181G.

Kernel.shmall should be set to the sum of

the SGA_TARGET values divided by the pagesize. Use ‘getconf pagesize’

command to get the page size. Units are bytes. The standard

pagesize on Linux x86_64 is 4096, or 4k.

Oracle soft memlock and oracle hard

memlock

should be set to slightly less than the total memory on the server, I chose

230G. Units are kbytes, so the number is 230000000. This is the

total amount of memory Oracle is allowed to lock.

Now for the hugepage setting itself: vm.nr_hugepages is the

total number of hugepages to be allocated on the system. The number of

hugepages required can be determined by finding the maximum amount of SGA

memory expected to be used by the system (the SGA_MAX_SIZE value normally, or

the sum of them on a server with multiple instances) and dividing it by the

size of the hugepages, 2048k, or 2M on Linux. To account for Oracle process overhead,

add five more hugepages. So, if we want to allow 180G of hugepages, we

would use this equation: (180*1024*1024/2048)+5. This gives us 92165

hugepages for 180G. Note: I took a shortcut in this calculation, by using

memory in MEG rather than the full page size. To calculate the number in

the way I initial described, the equation would be: (180*1024*1024*1024)/(2048*1024).

In order to allow the Oracle database to use up to 180G for the

SGA_TARGET/SGA_MAX_SIZE, below are the settings we would use for the OS:

/etc/security/limits.conf

oracle soft memlock 230000000

oracle hard memlock 230000000

/etc/sysctl.conf

vm.nr_hugepages = 92165

kernel.shmmax = 193273528320+1g =

194347270144

kernel.shmall = 47448064

In the Oracle database there is a new setting in 11gR2. This is

USE_LARGE_PAGES, with possible values of ‘true’, ‘only’, and ‘false’.

True is the default and current behavior, ‘False’ means never use hugepages,

use only small pages. ‘Only’ forces the database to use hugepages.

If insufficient

pages are available the instance will not start. Regardless of this

setting, it must use either all hugepages or all smallpages. According to

some blogs, using this setting is what allows the MEMORY_MAX_TARGET and

MEMORY_TARGET to be used with hugepages. As I noted above, I have not

verified this with a Metalink note as yet.

Next,

set SGA_TARGET and SGA_MAX_SIZE to

the desired size. I generally recommend setting both to the same size. Oracle recommends explicitly setting the MEMORY_TARGET and

MEMORY_MAX_TARGET to 0 when enabling hugepages. So these are the values

in the spfile that we change:

USE_LARGE_PAGES=only

SGA_TARGET=180G

SGA_MAX_SIZE=180G

MEMORY_MAX_TARGET=0

MEMORY_TARGET=0

In

order to verify that hugepages are being used, run this command: ‘cat /proc/meminfo | grep Huge’.

It

will show HugePages_Total, HugePages_Free, and HugePages_Rsvd. The

HugePages_Rsvd value is the number of hugepages that are in use.

Note

that this example uses Linux hugepage size of 2M (2048k). On Itanium

systems the hugepage size is 256M.

These

instructions should allow you successfully implement huge pages in Linux.

Note that everything would be the same for Oracle 10gR2, with the exception

that the USE_LARGE_PAGES parameter is unavailable.

References:

Tuesday, June 24, 2014

Data Guard Protection Modes

Maximum Protection

1. No data loss

2. Redo has to be

written to both Primary redo logs and standby redo logs (of atleast one standby database) before transaction commits

3. Primary database

shuts down if redo stream is prevented to write at standby redo logs of atleast

one standby database

4. Configure standby

redo logs on at least one standby database

5. Attribute to use in

log_archive_dest_n : LGWR, SYNC and AFFIRM for at least one standby DB

Maximum Availability

1. Redo has to be

written to both Primary redo logs and standby redo logs (of atleast one standby

database) before transaction commits

2. If redo stream is

prevented to write at standby redo logs of at least one standby database then

Primary database does not shuts down unlike Maximum protection mode, instead

primary database operates in Maximum Performance mode.

4. Primary database

automatically resumes to operate in maximum availability mode once all gaps are

resolved.

5. Configure standby

redo logs on at least one standby database

6. Attribute to use in

log_archive_dest_n : LGWR, SYNC and AFFIRM for at least one standby DB

Maximum Performance

1. Default mode

2. Asynchronous redo

data is written to at least one standby database

3. Attributes on

log_archive_dest_n to set either LGWR and ASYNC or ARCH for standby

DB destination

LGWR SYNC and ASYNC in Oracle DataGuard

Oracle Data Guard redo log transport

offers synchronous log transport mode (LogXptMode = 'SYNC') or asynchronous log

transport mode (LogXptMode = 'ASYNC'). The difference is all about when

the COMMIT happens .

LogXptMode = ('SYNC'):

As the name implies, SYNC mode synchronizes the primary with the standby

database and all DML on the primary server will NOT be committed until the logs

have been successfully transported to the standby servers. The

synchronous log transport mode is required for the Maximum Protection and

Maximum Availability data protection modes.

LogXptMode =

('ASYNC'): Conversely, asynchronous mode (ASYNC) allows updates (DML) to be

committed on the primary server before the log file arrives on the standby

servers. The asynchronous log transport mode is required for the Maximum

Performance data protection mode.

As

you know, for every committed transaction at the primary database, Oracle

generates and writes records to a local online log file.

If

you choose REAL TIME redo apply, Data Guard transport services transmit the

redo directly from the primary database log buffer to the standby database(s)

where it is written to a standby redo log file locally.

This

is highly efficient, it bypasses all IO generating processes. All redo travel

in memory structures of database.

This

method offers two types of replication methods: synchronous and asynchronous.

SYNCHRONOUS

REDO TRANSPORT (SYNC) STORY (ZERO DATA LOSS)

Before

11GR2 :

1.

PRIMARY DATABASE generates some redo data.

2.

It waits the local log file write to be completed before transmitting redo from

memory to the remote standby.

3.

Then, it sends REDO to STANDBY DATABASE.

4.

PRIMARY DATABASE waits until STANDBY DATABASE receives that redo data and even

writes to STANDBY REDO LOG FILES.

5.

If these conditions are satisfied, PRIMARY DATABASE acknowledges successful

commit to application.

6.

This provides guarantee of zero data loss in case of any failure happens.

With

11GR2 :

1.

PRIMARY DATABASE generates some redo data (again)

2.

PRIMARY DATABASE transmits redo to the remote standby in parallel with the

local online log file write at the primary database.

ASYNCHRONOUS

REDO TRANSPORT (ASYNC) STORY (MAXIMUM PERFORMANCE)

1.

PRIMARY DATABASE generates some redo data.

2.

PRIMARY DATABASE acknowledges successful commit to application without waiting

STANDBY DATABASE's acknowledgment.

3.

PRIMARY DATABASE ships directly from the primary log buffer (instead of from

disk) to STANDBY DATABASE.

4.

PRIMARY DATABASE's performance is at maximum, but there is no guarantee that a

small amount of data loss.

You

can use these options at PRIMARY DATABASE's configuration :

LOG_ARCHIVE_DEST_2='SERVICE=MYSTANDBY

LGWR ASYNC VALID_FOR=(ONLINE_LOGFILES,PRIMARY_ROLE)

DB_UNIQUE_NAME=MYUNIQUENAME'

So, DATA GUARD doesn't need to wait local log files to be written on anymore.

This eliminates the round-trip time

required for I/O to complete at the standby database.

Archivelog Mode vs Noarchivelog Mode

NOARCHIVELOG mode:

1. The

Redo Log Files are overwritten each time a log switch occurs, but the files are

never archived.

2. When a Redo Log File

(group) becomes inactive it is available for reuse by LGWR.

3. This mode protects a

database from instance failure, but NOT from media failure.

4. In the event of media

failure, database recovery can only be accomplished to the last full backup of the database

5. You cannot perform

tablespace backups in NOARCHIVELOG mode.

6. When the last redo log is written, the

database begins overwriting the first redo log again

7. Here we

can only go for cold backup.Generally

in this mode complete recovery is not possible

e.g., Full Database

Backup - NOARCHIVELOG Mode

SQL> BACKUP DATABASE;

ARCHIVELOG mode

–

1. Full On-line Redo Log

Files are written by the ARCn process to specified archive

locations, either disk or tape – you

can create more than one archiver process to improve performance.

2. A database control file

tracks which Redo Log File groups are available for reuse (those that have been archived).

3. The DBA can use the last

full backup and the Archived Log Files to recover the database.

4. A Redo Log File that has

not been archived cannot be reused until the file is archived – if the database

stops awaiting archiving to complete, add an additional Redo Log Group.

5. When you are working with

RMAN, ensure that the database runs in ARCHIVELOG mode.

6. It requires additional disk space to store archived log files.

7. It is important that the directory containing

the archived log files doesn’t become full because if it does, the ARCH thread

wouldn’t be able to archive the redo log files. The DBA has to continuously

monitor the used-space percentage in the archive directory.

8. In archivelog mode cold and hot backups are possible. We can recover our

database upto the last point of failure.

e.g., Full Database Backup - ARCHIVELOG Mode

SQL> BACKUP DATABASE PLUS ARCHIVELOG;

Monday, June 23, 2014

How to rename a database in Oracle

Steps

to change DBID and DBNAME:

Source

database name is DCPROC and we will convert it to DRPROC.

Step

1. Mount the database after a clean shutdown:

[oracle@netmindtwcint ~]$ export

ORACLE_SID=DCPROC

[oracle@netmindtwcint ~]$ sqlplus sys as

sysdba

SQL> shutdown immediate;

SQL> startup mount;

Step

2. Invoke the DBNEWID utility (nid) specifying the new DBNAME from the command

line using a user with SYSDBA privilege:

1. TO CHANGE ONLY DB NAME

% nid TARGET=SYS DBNAME=LSAUDI SETNAME=YES

2. TO CHANGE ONLY DBID

%

nid TARGET=SYS

[oracle@mumvfspocsmdb log]$ nid TARGET=SYS

DBNAME=DRPROC

DBNEWID: Release 11.2.0.3.0 - Production on

Fri Oct 4 14:41:21 2013

Copyright (c) 1982, 2011, Oracle and/or its

affiliates. All rights reserved.

Password:

Connected to database DCPROC

(DBID=2392917474)

Connected to server version 11.2.0

Control Files in database:

/oracle_backup/oracle/ora_control1.ctl

/oracle_backup/oracle/flash_recovery_area/control02.ctl

Change database ID and database name DCPROC

to DRPROC? (Y/[N]) => y

Proceeding with operation

Changing database ID from 2392917474 to

1776249945

Changing database name from DCPROC to DRPROC

Control File /oracle_backup/oracle/ora_control1.ctl - modified

Control File /oracle_backup/oracle/flash_recovery_area/control02.ctl -

modified

Datafile /oracle_backup/oracle/system01.db - dbid changed, wrote new

name

Datafile /oracle_backup/oracle/sysaux01.db - dbid changed, wrote new

name

Datafile /oracle_backup/oracle/undotbs01.db - dbid changed, wrote new

name

Datafile /oracle_backup/oracle/saudidata1.db - dbid changed, wrote new

name

Datafile /oracle_backup/oracle/temp01.db - dbid changed, wrote new name

Control File /oracle_backup/oracle/ora_control1.ctl - dbid changed, wrote

new name

Control File /oracle_backup/oracle/flash_recovery_area/control02.ctl -

dbid changed, wrote new name

Instance shut down

Database name changed to DRPROC.

Modify parameter file and generate a new

password file before restarting.

Database ID for database DRPROC changed to

1776249945.

All previous backups and archived redo logs

for this database are unusable.

Database is not aware of previous backups

and archived logs in Recovery Area.

Database has been shutdown, open database

with RESETLOGS option.

Succesfully changed database name and ID.

DBNEWID - Completed succesfully.

Step

3 Create a new password file:

[oracle@netmindtwcint dbs]$ orapwd file=$ORACLE_HOME/dbs/orapwDRPROC

password=Oracle entries=10

Step

4 Rename the initFILE to match the new DBNAME.

Make

new init.ora file from existing init.ora file and change db_name parameter from

DCPROC to DRPROC manually

change *.db_name='DCPROC' to *.db_name='DRPROC' in initDRPROC.ora file.

[oracle@netmindtwcint dbs]$ cp

initDCPROC.ora initDRPROC.ora

SQL> alter system set db_name='DRPROC'

scope=memory;

OR

Rename

the SPFILE to match the new DBNAME.

Make

new spfileDRPROC.ora file and from existing spfileDCPROC.ora file and change

db_name parameter from DCPROC to DRPROC commandly

[oracle@netmindtwcint dbs]$ cp spfileDCPROC.ora

spfileDRPROC.ora;

SQL> alter system set db_name='DRPROC'

scope=spfile

Step

5 Configure listener & tnsnames files

Change

the $ORACLE_HOME/network/admin/tnsnames.ora file wherever it has the old db

name.

If

there is a static registration of the database in the listener.ora file then

change the database name in the following file $ORACLE_HOME/network/admin/listener.ora.

Step

6. Open the database with Resetlogs option:

[oracle@netmindtwcint dbs]$ sqlplus sys as

sysdba

SQL*Plus: Release 11.2.0.2.0 Production on

Fri Apr 27 15:00:03 2012

Copyright (c) 1982, 2010, Oracle. All rights reserved.

Enter password:

Connected to an idle instance.

SQL> startup mount

ORACLE instance started.

Total System Global Area 535662592 bytes

Fixed Size 1345376 bytes

Variable Size 167774368 bytes

Database Buffers 360710144 bytes

Redo Buffers 5832704 bytes

Database mounted.

SQL> alter database open resetlogs;

Database altered.

Subscribe to:

Comments (Atom)